
2024.09.26

Improved performance for generative AI. What you need to know about the real capabilities of "communication semiconductor devices"


Generative AI is steadily working its way into our work and everyday lives. But have you heard of the communication semiconductor devices fueling the spread and development of AI systems?

Mitsubishi Electric is a manufacturer of compound semiconductor devices. The company has received a flood of inquiries from hyperscalers* in recent years.

Such companies look to Mitsubishi Electric’s optical devices and similar products to improve communication infrastructure in their data centers — the backbone of AI performance.

*Hyperscalers: cloud computing companies that operate enormous, "hyperscale" data centers housing thousands of servers. Prominent examples include Amazon Web Services, Google Cloud, Microsoft Azure, IBM Cloud, Alibaba Cloud, and Meta.

Expectations for even wider utilization of generative AI are high. What steps are necessary to support its dissemination?

Kento Kajitani, an expert who advises businesses on generative AI, met with Kenji Masuda to discuss the matter. Masuda is Group Vice President, Semiconductor & Device Group at Mitsubishi Electric, and is responsible for High Frequency & Optical Device Works.

Kento Kajitani (left), and Kenji Masuda from Mitsubishi Electric (right)



How do you envision a society in which "humans are overpowered by AI"?

Masuda: I’ve been astonished at just how quickly generative AI has spread.

Young people at our company have already started using it, and it’s tough for me to keep up. The situation has changed a lot even in the past year.

A Mitsubishi Electric employee since 1990, Masuda began his career at the Kamakura Works, where he contributed to the design and development of laser diode modules for optical communications. Following a business restructuring, he was transferred to the predecessor organization of High Frequency & Optical Device Works. He later worked in Shenzhen, China, as a field application engineer supporting Chinese sales companies. He then became head of the sales and marketing department at the head office, and took up his current position in 2022.


Kajitani: 2023 was the year generative AI entered the popular consciousness. It was also a year of intensified competition.

LLM* performance improves with the number of parameters, the amount of training data, and the amount of computation used in training.

Since these are fairly easy goals to achieve with enough funding, we’ve seen a lot of entry into the market, not just from OpenAI, but from the GAFAM big tech firms and startups with huge funding behind them.

As a result, LLMs have evolved into a partial replacement for certain business functions.

*LLM: Large Language Model
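Kajitani's point that performance scales with parameters, data, and computation can be made concrete with a widely used rule of thumb. Training a dense transformer costs roughly 6 FLOPs per parameter per training token. The sketch below assumes that approximation, which does not come from the interview. The model size and token count are illustrative values only.

```python
# Rough training-compute estimate for a dense transformer LLM.
# Assumption: the common rule of thumb C ~= 6 * N * D FLOPs
# (about 2 FLOPs per parameter per token forward, about 4 backward).

def training_flops(num_params: float, num_tokens: float) -> float:
    """Approximate total training FLOPs as 6 * parameters * tokens."""
    return 6.0 * num_params * num_tokens

if __name__ == "__main__":
    # Illustrative sizes only: 70B parameters trained on 1.4T tokens.
    n, d = 70e9, 1.4e12
    print(f"{training_flops(n, d):.2e} FLOPs")  # 5.88e+23 FLOPs
    # Doubling both parameters and data roughly quadruples the compute bill.
    print(training_flops(2 * n, 2 * d) / training_flops(n, d))  # 4.0
```

This is why "enough funding" matters so much. Under this approximation, scaling a model and its dataset together drives compute, and therefore cost, up quadratically.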

Kajitani has advised more than ten companies on product strategy and advanced technologies, including generative AI. After a stint managing growth at VASILY, Inc. and freelance work in support of new business startups and growth, he developed XR/Metaverse businesses as a representative of MESON, Inc. up until August 2022. He is the author of "Seisei AI Jidai wo Kachinuku Jigyou ・ Soshiki no Tsukurikata" (How to Create a Winning Business / Organization in the Generative AI Era) and "Ichiban Yasashii Guroosu Hakku no Kyouhon" (The Easiest Growth Hack Handbook).


Masuda: Which services do you actually use, Mr. Kajitani?

Kajitani: I use Anthropic’s Claude 3 for text generation and Midjourney for illustrating presentations and generating blog cover images.

For research, I rely on Gemini on a daily basis for summarizing search results, and I use Perplexity.AI when I need accurate information.

The adoption of these generative AI tools by the general public has made for a dramatic change.

Masuda: I’m sure one main reason for that is the impressive improvements in performance, but is there something else to it?

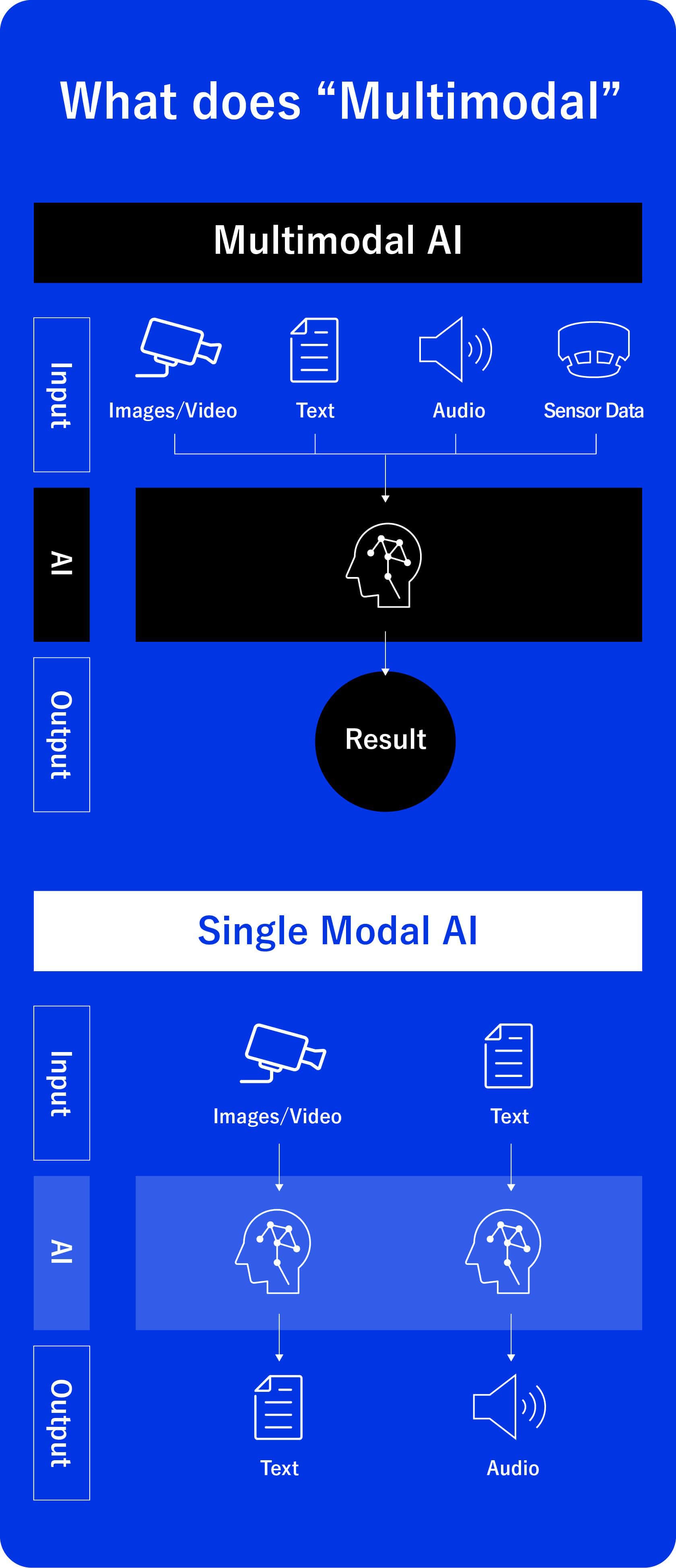

Kajitani: I think a big part of it is progress on "multimodalization": the ability to work with multiple media types at the same time, not only text but also images, audio, and video.

We can input text and get images, or feed in a video and get a summary of its content.

LLMs are improving, too. We’re at the point where we can generate program code, including apps and web services, based on written instructions.

In the very near future, anyone who wants to may be able to create an app as easily as writing a blog. That’s going to change the competitive environment for apps and web services in a huge way.

Masuda: While there’s a lot of excitement about the unimaginable evolution of AI, there are also many ordinary people, like me, who are still more than a little concerned about AI’s capacity to take over our society.

Is there a sort of ideal society that you envision coming out of all this rapid AI development?

Kajitani: Well, it’s a bit of an abstract answer, but I want to see a society that doesn’t separate humans and AI.

Right now, for example, I’m talking to you to the best of my ability, but there’ll come a day when I’ll be able to pull in knowledge and intelligence from AI as I’m speaking.

The ability to share auditory and visual information with AI in real time using wearable devices is already here. In the mid- to long-term, we’re going to see more services that can integrate AI in a more natural way. We’ll see more widespread use of technologies like the direct brain implants being researched by Neuralink, founded by Elon Musk, and brain-machine interfaces (BMIs) using nanobots.

This may loosen the meritocracy that currently prevails in society.

When everyone is able to access the intelligence of AI, the difference between an IQ of 100 and an IQ of 180 will be like a rounding error. When there’s no inherent difference in ability, society may change to value humanity all the more.

Masuda: I imagine we’ll need to get past quite a few bottlenecks before AI can be integrated that fully into society. Mr. Kajitani, what type of conditions will need to exist before we see that kind of explosive expansion of AI?

Kajitani: There are really two main factors. The first is improving user literacy.

Many Japanese companies are hesitant to use generative AI due to security concerns. Meanwhile, a survey in the U.S. found that nearly 90% of U.S. enterprises use three or more LLMs. It’s a totally different attitude.

Behind all these available options is the sheer number of generative AI startups in the U.S.

The core technologies behind generative AI rely on foreign firms, so domestic startups in Japan have trouble developing a medium- to long-term advantage, what we in the generative AI field call a "moat."* Even Japanese venture capital (VC) firms currently see investing in them as high-risk.

*Advantages and barriers to entry that protect a company from competition.

We believe that we need policies in Japan that will stimulate investment, like preferential treatment for VC investment in the AI field.

Strengthening communication infrastructure: the key to spreading generative AI

Kajitani: I’d say the other major factor for expanding the use of generative AI is communication infrastructure.

As generative AI becomes multimodal, we’ll be able to, for example, analyze a sporting event in real time and provide automated commentary.

But right now, we still have to wait for data to be transmitted and processed. As we move closer to real-time AI applications, communication speed becomes a bottleneck.

Masuda: Here at Mitsubishi Electric, we’ve come to the same conclusion.

Expanding AI will require an upgrade in our communication infrastructure.

Over the years, Mitsubishi Electric has developed and commercialized the key technologies underlying the evolution of communication infrastructure.

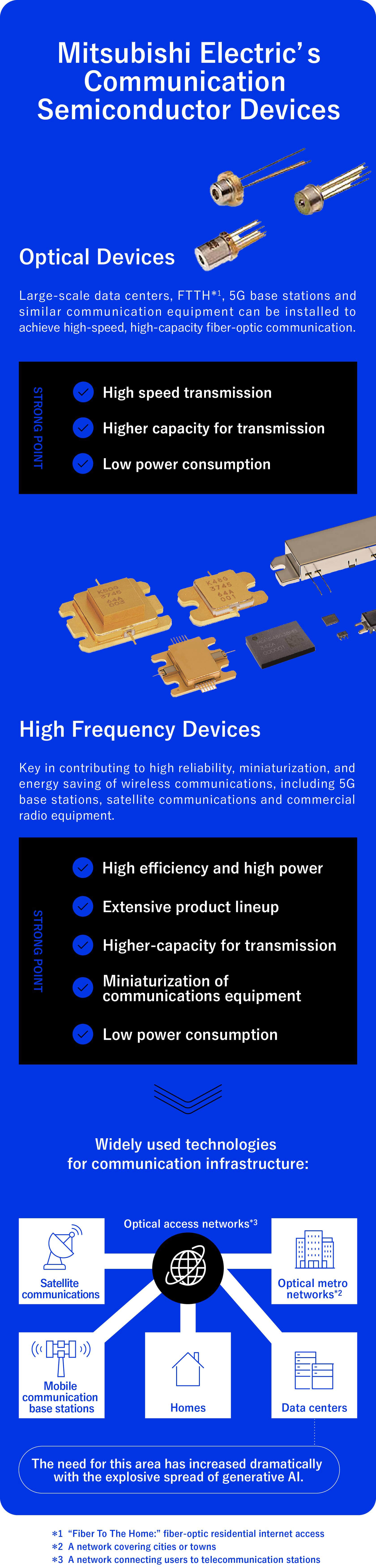

These include optical devices for fiber-optic communication and high-frequency devices for satellite transmission and smartphones.

Traditionally our end customers have been telecommunication carriers building networks, but generative AI has caused a dramatic change.

Demand for optical devices in particular has exploded, due to the needs of cloud-based companies, the so-called "hyperscalers."

They’re currently building data centers all over the world, and they need high-performance optical devices to improve the efficiency of generative AI in those data centers.

Kajitani: There was a time when ChatGPT had to limit its number of users because its computing resources simply couldn’t keep up with demand.

These days generative AI is so popular that even the hyperscalers with all their data centers can’t keep up, so demand for data centers is on the rise.

Masuda: You’re absolutely right.

Data centers achieve such high processing capacity by using high-speed optical fiber connections to link up servers equipped with high-performance CPUs and DPUs*.

In other words, the higher the performance of optical devices, the better the generative AI models perform.

Mitsubishi Electric has the top share** of the world market in optical communication devices for data centers.

*DPU: Data processing unit. A relatively new, specialized processor, capable of handling the high-volume, complex workloads demanded by machine learning and other advanced computing applications.

**Share based on FY2023 actual results for EMLs supplied to data centers, according to a Mitsubishi Electric estimate.

Kajitani: But you have competitors, of course. Why are cloud services choosing Mitsubishi Electric’s optical devices?

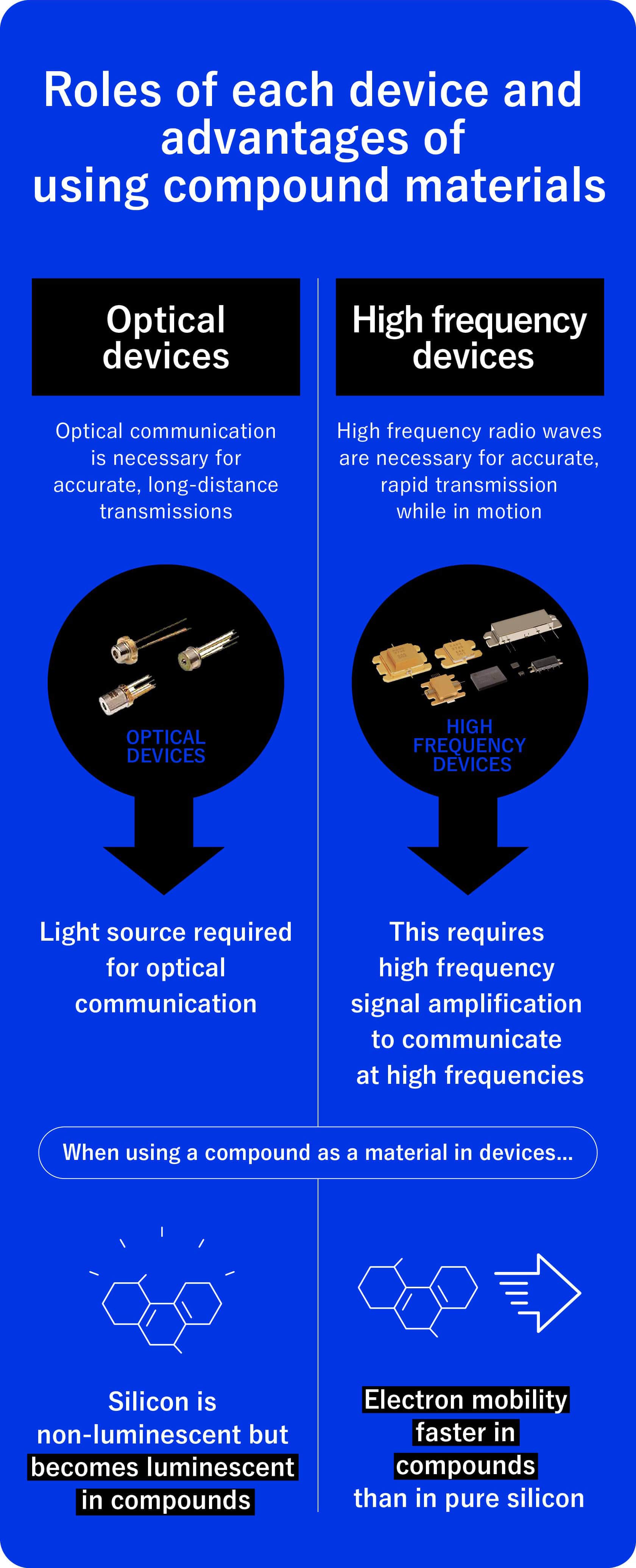

Masuda: The expertise we’ve cultivated over many years in the compound semiconductor business is a major factor, I’d say.

Compound semiconductors are a good fit for high-speed, large-volume communications, not least because of their high electron mobility.
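The sketch below compares low-field drift velocity (v = μE) for silicon and two common compound semiconductors, to show what higher electron mobility means in practice. The mobility figures are approximate textbook room-temperature values for lightly doped material, not Mitsubishi Electric device data. The relation only holds at fields well below velocity saturation.

```python
# Approximate room-temperature electron mobilities in cm^2/(V*s) for
# lightly doped material. Textbook ballpark values, not device data.
ELECTRON_MOBILITY = {
    "Si": 1400.0,
    "GaAs": 8500.0,
    "InP": 5400.0,
}

def drift_velocity_cm_per_s(mobility: float, field_v_per_cm: float) -> float:
    """Low-field drift velocity v = mu * E (valid only well below saturation)."""
    return mobility * field_v_per_cm

if __name__ == "__main__":
    field = 100.0  # V/cm: a low field, so the linear relation v = mu * E applies
    for material, mu in ELECTRON_MOBILITY.items():
        v = drift_velocity_cm_per_s(mu, field)
        ratio = mu / ELECTRON_MOBILITY["Si"]
        print(f"{material:5s} v = {v:.2e} cm/s ({ratio:.1f}x Si)")
```

Under these assumptions, electrons in GaAs drift roughly six times faster than in silicon at the same low field. This is one reason compound semiconductors suit high-frequency devices.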

Compound semiconductors are extremely hard to make, however.

They’re a bit like an agricultural crop. It’s difficult to control the production process, and even a slight variation in conditions can result in a huge change in the final product.

We’ve been working with compound semiconductors since the 1960s, so we have the experience necessary to keep our yield rates high and provide a stable supply of uniform devices.

To use your word, this is our "moat." It would be hard for anyone else to imitate our expertise, and that’s given us a strong reputation among cloud-based companies.

To be specific, we’ve achieved excellent performance and production capacity with our EML*, which uses a proprietary hybrid structure that integrates the light-emitting element with the modulator. As I mentioned earlier, we have a large share of the market.

*EML (Electro-absorption Modulator integrated Laser diode): a semiconductor laser diode with an integrated electro-absorption modulator
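Conceptually, an EML keeps its laser section running continuously and uses the electro-absorption section as a fast optical gate. Under reverse bias the modulator absorbs more light, so switching the bias encodes bits as high and low output power. The sketch below models that on-off keying with hypothetical power and transmission values, not actual device parameters. It also computes the extinction ratio, a standard figure of merit for how cleanly 1s and 0s are separated.

```python
import math

# Hypothetical parameters for illustration only (not Mitsubishi Electric data).
LASER_POWER_MW = 10.0  # continuous-wave output of the integrated laser section
T_ON = 0.5             # modulator transmission at 0 V bias (light passes)
T_OFF = 0.05           # transmission under reverse bias (light mostly absorbed)

def modulate(bits):
    """On-off keying: the laser stays on; the EA modulator gates the light."""
    return [LASER_POWER_MW * (T_ON if b else T_OFF) for b in bits]

def extinction_ratio_db(p_one: float, p_zero: float) -> float:
    """Extinction ratio = 10 * log10(P1 / P0), in decibels."""
    return 10.0 * math.log10(p_one / p_zero)

if __name__ == "__main__":
    powers = modulate([1, 0, 1, 1, 0])
    print(powers)  # [5.0, 0.5, 5.0, 5.0, 0.5]
    print(f"ER = {extinction_ratio_db(max(powers), min(powers)):.1f} dB")  # ER = 10.0 dB
```

Because the laser never switches on and off itself, this design avoids the wavelength chirp that direct modulation of a laser can introduce. That is a common motivation for integrating a separate modulator.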

Kajitani: It’s interesting that there’s an analog element at the core of these digital components.

On a related note, semiconductor performance improves a lot every year. Is the same true for semiconductor devices for communication?

Masuda: The situation is a bit different for silicon-based semiconductors, which are mainly for computational processing, and compound semiconductors in communications, but we are seeing higher-than-ever demand from cloud companies for improved performance in optical devices.

When our primary customers were the telecom carriers, speeds were improving by 4 to 10 times every 5 years. Cloud companies need those kinds of performance increases in 3 years.

The reason is that residential customers currently get optical communication speeds of 1 to 10 Gbps*, but data centers need performance ten to a hundred times faster than that.

*Gbps (gigabits per second): a transmission rate of 1 billion bits per second
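The bandwidth gap Masuda describes is simple arithmetic: 1 to 10 Gbps multiplied by 10 to 100 gives roughly 10 to 1,000 Gbps. The sketch below works that out. It then assumes a hypothetical 100 GB payload and ideal links with no protocol overhead, and shows how transfer time shrinks as link speed rises.

```python
RESIDENTIAL_GBPS = (1, 10)  # range quoted in the interview
MULTIPLIERS = (10, 100)     # "ten to a hundred times faster"

def transfer_seconds(size_gigabytes: float, rate_gbps: float) -> float:
    """Ideal transfer time, ignoring protocol overhead: bits / (bits per second)."""
    return size_gigabytes * 8 / rate_gbps

if __name__ == "__main__":
    low = RESIDENTIAL_GBPS[0] * MULTIPLIERS[0]   # 10 Gbps
    high = RESIDENTIAL_GBPS[1] * MULTIPLIERS[1]  # 1,000 Gbps
    print(f"Implied data-center range: {low} to {high} Gbps")
    # Hypothetical 100 GB payload (e.g., a shard of training data).
    for rate in (1, 10, 100, 1000):
        print(f"{rate:>5} Gbps: {transfer_seconds(100, rate):8.1f} s")
```

Under these assumptions, moving 100 GB takes 800 seconds at 1 Gbps but under a second at 1,000 Gbps.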

We’re in direct communication with cloud companies right now to meet that demand, working on R&D for next-generation optical devices that will meet their needs.
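The pace Masuda quoted earlier can be expressed as a compound annual growth factor. Carriers saw speeds rise 4 to 10 times every 5 years, and cloud companies want the same gains in 3 years. For a speed multiple m over y years, the factor is g = m^(1/y). The sketch below applies that formula to the 4x and 10x figures. It is plain arithmetic on the quoted numbers, not a product roadmap.

```python
def annual_growth(multiple: float, years: float) -> float:
    """Compound annual growth factor needed to reach `multiple` in `years`."""
    return multiple ** (1.0 / years)

if __name__ == "__main__":
    for multiple in (4, 10):
        carrier = annual_growth(multiple, 5)  # telecom-carrier pace
        cloud = annual_growth(multiple, 3)    # pace cloud companies ask for
        print(f"{multiple}x: {carrier:.2f}/yr over 5 yrs vs {cloud:.2f}/yr over 3 yrs")
```

This works out to roughly 32% to 58% per year at the carrier pace, versus roughly 59% to 115% per year at the pace cloud companies are asking for.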

Our power amplifier modules, which are high-frequency devices for 5G* base stations, have also been adopted by major base station manufacturers. They increase communication capacity and help provide the communication infrastructure that forms the backbone of generative AI.

We’re also hard at work developing the constituent technologies for the next generation, "Beyond 5G/6G."

*5G: 5th Generation mobile communication systems

Kajitani: It’s remarkable that a Japanese company is still coming out ahead in the highly competitive, high-investment field of semiconductor R&D.

Masuda: Compound semiconductors are a field where we can leverage the strengths of Japanese manufacturing, and one where I think we can survive.

Some of our semiconductor products also include infrared sensors, by the way.

We see a lot of potential in combining sensing and communication.

As generative AI becomes even more widespread, we’ll need to extract even more information from anywhere through sensing technology. We may see new value generated and new solutions to social problems.

Kajitani: That’s the direction LLMs are evolving in, right?

As LLMs become multimodal, we’ll see use cases for data from infrared sensors.

Off the top of my head, we can analyze the flow of people through stations, or analyze usage trends in shops.

Video data is heavy and carries privacy risks, but infrared sensors would provide sufficient data while protecting privacy. It’s a practical use of the technology’s unique characteristics.
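The sketch below illustrates why low-resolution infrared data can be enough for people-flow analysis. It counts warm regions in a small thermal frame by thresholding and grouping adjacent pixels. The frame, its size, the 28 °C threshold, and the function name are all hypothetical. A real system would also need background modeling and tracking across frames.

```python
from collections import deque

def count_warm_blobs(frame, threshold):
    """Count 4-connected regions whose temperature exceeds `threshold`."""
    rows, cols = len(frame), len(frame[0])
    seen = [[False] * cols for _ in range(rows)]
    blobs = 0
    for r in range(rows):
        for c in range(cols):
            if frame[r][c] > threshold and not seen[r][c]:
                blobs += 1  # new warm region found; flood-fill it with BFS
                queue = deque([(r, c)])
                seen[r][c] = True
                while queue:
                    y, x = queue.popleft()
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and not seen[ny][nx] and frame[ny][nx] > threshold):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
    return blobs

if __name__ == "__main__":
    # Hypothetical 4x6 thermal frame in deg C: two warm bodies on a ~22 C floor.
    frame = [
        [22, 22, 31, 22, 22, 22],
        [22, 22, 32, 22, 22, 30],
        [22, 22, 22, 22, 22, 31],
        [22, 22, 22, 22, 22, 22],
    ]
    print(count_warm_blobs(frame, threshold=28))  # 2
```

The frame carries only 24 temperature readings, with nothing that identifies a face. By comparison, a single uncompressed Full HD color frame is 1920 × 1080 × 3 = 6,220,800 bytes.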

Masuda: Thank you for saying so. We’re gathering up those sorts of ideas right now and thinking about how they might be put into practice in society.

In the future, we may be moving into an era when generative AI is so widespread that it becomes integral to us as human beings.

When that happens, our communication semiconductor devices and infrared sensors will be more indispensable to society than ever.

We are dedicated to providing better products and solving social issues to make that world a reality.

*The information on this page is current as of April 2024.

Author: Yusuke Aoyama

Photography: Masashi Kuroha

Design: Risa Yoshiyama

Editor: Akira Shimomoto

Translator: Jeff Moore